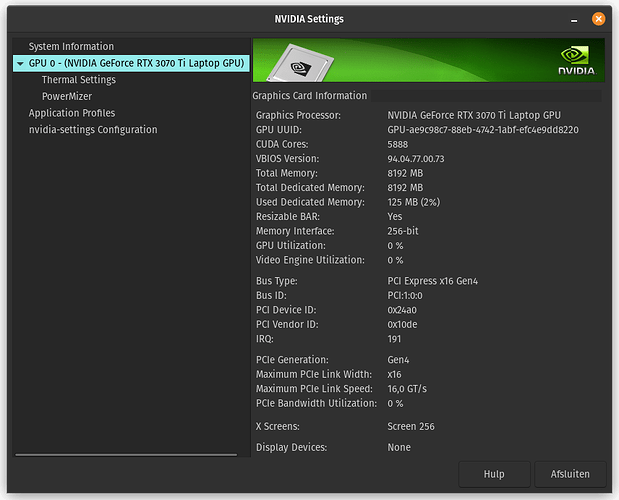

Ray Tracing started off as a gimmick that almost nobody could use at the time of its release, as most folks were still using Nvidia GTX 1660 GPUs and few had Nvidia RTX 20 series cards.

We learned years ago that if you want to use Ray Tracing, you'd better have an Nvidia 3080 or better. Not even the 3060 could produce usable FPS with it on!

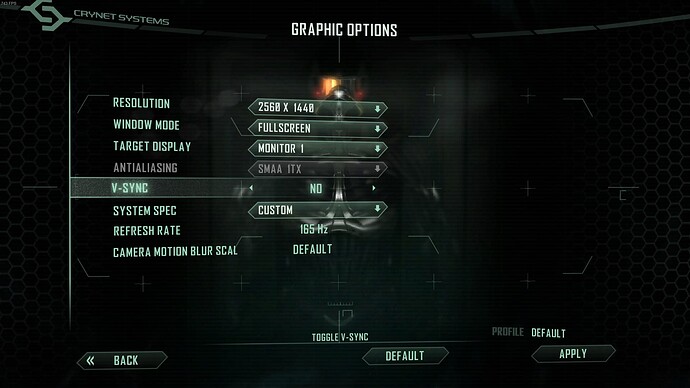

In the latest news, Ray Tracing is coming to every game from now on, and you won't have an option to turn it off. I see it as their little trick to force you to buy a new computer.

Because, as you know, we can't just make fun games anymore. Games are now used to push users to spend far more money than they should on new hardware, just to play a game.

I guess you could say it's always been that way. Having said that, gaming studios have never come off as scummy as they do today! So we're living in a time where, if you want to use all those fancy features in games, you'd better get that 4080/4090 or 5080/5090!

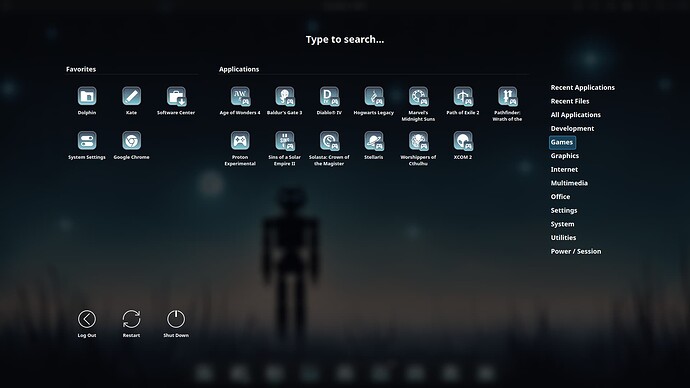

The other option is to switch to AMD, but their top cards don't even remotely compete with Nvidia's best offerings. And Intel's Arc GPUs don't come close to being an alternative to either of them.

Literally, a basic function that both Nvidia and AMD cards handle, DirectX 12, Intel can't even do without black screening on people. So Intel is still in diapers when it comes to GPUs, and I don't even consider them an option.

Intel is like a toddler still learning to walk; that's how I see their GPUs. They have years to go before they can even begin to compete against Nvidia and AMD.