I recently noticed a significant difference in speed between Nautilus and the command-line tools `du` and `find` when counting the items in a folder and calculating its size.

Here’s the test I performed in the terminal:

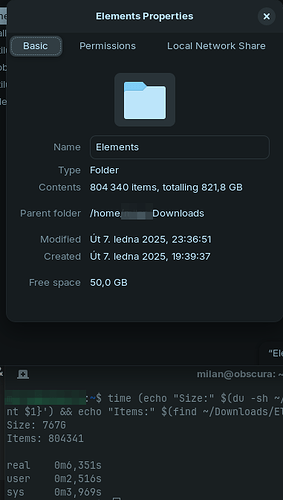

```
$ time (echo "Size:" $(du -sh ~/Downloads/Elements | awk '{print $1}') && echo "Items:" $(find ~/Downloads/Elements | wc -l))
Size: 767G
Items: 804341

real    0m6,351s
user    0m2,516s
sys     0m3,969s
```

This reported a folder size of 767G and a total of 804,341 items in about 6 seconds.
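The command above walks the tree twice, once for `du` and once for `find`. As a point of comparison, here is a minimal sketch that gathers an item count and a byte total in a single walk. It assumes GNU find (`-printf` is not POSIX), and `count_and_sum` is a hypothetical helper name. Note that it sums apparent file sizes in bytes, which is not the same quantity as the disk usage `du` reports by default.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count items and sum apparent sizes in ONE directory walk.
# Assumes GNU find (for -printf). -mindepth 1 skips the starting directory
# itself, so the count covers only the items inside the folder.
count_and_sum() {
  local dir=$1
  # %s prints each entry's apparent size in bytes, one per line;
  # awk counts the lines and adds up the sizes.
  find "$dir" -mindepth 1 -printf '%s\n' |
    awk '{ n++; bytes += $1 } END { printf "Items: %d\nBytes: %.0f\n", n + 0, bytes + 0 }'
}
```

It can be timed the same way as the original test, e.g. `time count_and_sum ~/Downloads/Elements`. `%.0f` is used instead of `%d` for the byte total so that sums in the hundreds of gigabytes print correctly regardless of which awk is installed.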

However, Nautilus took a staggering 2 minutes and 50 seconds to count the same number of items (off by one, possibly because `find` also lists the starting directory itself) and to calculate the folder size. The size difference is likely due to base-10 vs. base-2 units (`du -h` uses powers of 1024), but it's the slowness that stands out most.
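To check the units explanation, GNU `du` can print the same folder in both conventions. The sketch below assumes GNU coreutils; `size_both_units` is a hypothetical helper name. It also passes `--apparent-size` for the decimal figure, on the assumption that a file manager sums file lengths rather than allocated disk blocks, which can be a second source of difference.

```shell
#!/usr/bin/env bash
# Hypothetical helper: show a folder's size in binary and decimal units.
# Assumes GNU coreutils du:
#   -h              -> powers of 1024 (the "767G" above)
#   --si            -> powers of 1000 (GB)
#   --apparent-size -> sum of file lengths instead of allocated blocks
size_both_units() {
  local dir=$1
  # du prints "<size><TAB><path>"; cut keeps only the size field.
  printf 'Binary units (du -sh): %s\n' "$(du -sh "$dir" | cut -f1)"
  printf 'Decimal units, apparent size: %s\n' "$(du -s --si --apparent-size "$dir" | cut -f1)"
}
```

Running `size_both_units ~/Downloads/Elements` should give a decimal figure that can be compared directly with the one Nautilus shows.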

Observations:

- Terminal tools like `du` and `find` are extremely efficient.
- Nautilus takes much longer to process the same information.
- The discrepancy is surprising, especially with a folder this large (800k+ items).

Has anyone else experienced such a lag in Nautilus? Is there any way to optimize its performance when working with directories containing a large number of items? Or is this simply a limitation of the graphical interface?